GDPR-compliant Process

We collect, store and process participant data in accordance with GDPR requirements. Our external data protection officer acts as both a sparring partner and a vigilant watchdog on all aspects of data protection.

ISO-certified Hosting

A German company certified according to ISO/IEC 27001 is responsible for storing the data and operating the data center. This ensures that participant data is protected by technical and organisational measures of the highest standard.

Data Processing on your Behalf

YOU own all the data – WE, as your data processor, have clearly defined responsibilities, which are set out in a corresponding data processing agreement.

Differentiated Visibility

Not all data collected from participants is meant to be seen by everyone. Depending on how sensitive it is, data is visible only to certain roles … or not at all. Participants always know who can see which of their information.

Encrypted Transmission, Pseudonymised Storage

Participant information in online questionnaires is transmitted with SSL encryption and stored in the database in pseudonymised form. The stored data therefore cannot be directly linked to an individual participant.

Constantly put to the Test

To meet the data protection and IT security standards of corporate audit committees, we regularly and rigorously review our systems against their requirements – up to and including penetration tests. Several DAX companies have successfully tested and approved us.

Built-in Trust

TRANSPARENT

Our matching algorithm is not a ‘black box’: together with our clients, we carefully define the underlying criteria and their impact on the matching process. We make it transparent to participants which data is requested for which purpose and on what basis matches are made.

BIAS SENSITIVE

Our matching algorithm maintains neutrality: when we design the questionnaire collaboratively, we consider the perspectives of everyone involved in order to avoid bias – in other words, to match participants neutrally, free of preconceptions and filter ‘bubbles’.

SELF-DETERMINED

Participants decide for themselves which characteristics they prefer in a match, instead of being matched across the board according to the “like with like” principle. Only information deliberately provided for the matching is used; no other data, such as from online profiles, is drawn on.

Algorithms & AI

With great power comes great responsibility (Spider-Man)

We believe that moral responsibility matters most precisely when superpowers are involved. The more algorithm-based software intervenes in our lives, the more indispensable it becomes to judge it by ethical as well as technical standards. This only works if its development is transparent and it is conceived from different perspectives. As long as it remains a ‘black box’ guarded by development departments and reflects the decision-making patterns of only a few people, it will display the same errors and weaknesses as the human decisions it is based on. The question is therefore no longer WHETHER algorithm-based applications are needed – it is WHICH ethical standards must guide the development of algorithms and self-learning AI so that they work in the interest of EVERYONE.

With CHEMISTREE, Rosmarie Steininger has forged her own path to responsible, people-centered AI. Her mission is to inform public opinion beyond in-house corporate practice and individual industries, to raise awareness and to do genuine educational work. What is needed is an open, critical and, above all, universally comprehensible (!) exchange about how algorithm-controlled software works and what side effects it has – instead of media scaremongering and corporate secrecy. To this end, Rosmarie Steininger is involved in a variety of socio-political projects:

AI Experimentation Space

Under the paradigm of human-centered AI, the Federal Ministry of Labor and Social Affairs is funding eleven so-called AI learning and experimentation spaces as part of its New Quality of Work initiative. CHEMISTREE is the initiator of one of them and a consortium partner in it: KIDD – KI im Dienste der Diversität (AI for Diversity). KIDD aims to devise and test new rules for the development of algorithm-based software. To systematically prevent bias and discrimination, diverse perspectives should be taken into account as early as the development phase – long before a ‘finished’ digital system is introduced and used in the company. Therefore, not only classic IT development departments are involved, but also a heterogeneous group of employees, works and staff councils, and experts.

Over the course of three years, the KIDD consortium partners will use various practical projects to examine the consequences and challenges of this approach and the extent to which such a procedure can be standardised. The goal: a model process for introducing non-discriminatory AI, together with criteria derived from it that can be applied in other companies and to other issues relating to digital systems.

Background

As a key technology, AI will have a major influence on the future world of work. Putting people at the centre and making the development and use of AI transparent: the Federal Ministry of Labor and Social Affairs (BMAS) is working on this at many levels. With its Initiative Neue Qualität der Arbeit (INQA) – New Quality of Work Initiative, it supports and encourages companies and employees to proactively approach and help shape the digital transformation of the world of work.

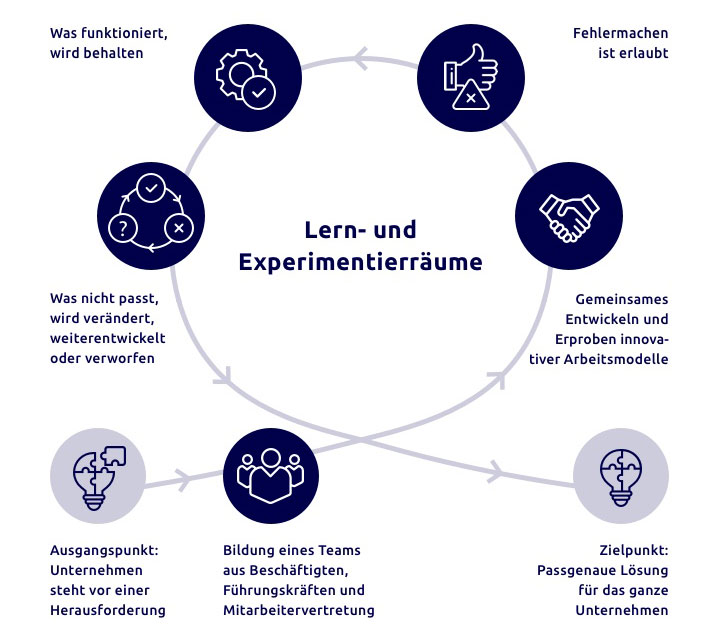

The INQA Learning & Experimentation Spaces are part of this initiative. Funded by the BMAS, they open up protected, project-specific spaces in which the cooperating companies can iteratively develop and test new working models. Employees and interest groups are closely involved throughout the entire process. Personal experiences and learning outcomes are shared with the wider public.

© INQA.de

The Participants

The consortium brings together eight companies and partner organisations of different sizes and from different industries. They are coordinated by the nexus Institute for Cooperation Management and Interdisciplinary Research. The Technical University Berlin is responsible for the scientific evaluation of the research project.

CHEMISTREE is responsible for the topic ‘Algorithms and AI’: creating transparency and building basic knowledge. At the same time, in the buddy matching experimentation space, we are implementing the onboarding of new employees at Q_PERIOR. In this way, we are gradually developing reusable standards for ethical digitisation.

The Status

With the “Q_Buddy” matching, Q_PERIOR and CHEMISTREE have completed their first joint practical project. What sets this digital matching platform apart from the many other software solutions used in the company is also its most distinctive feature: the arguments and perspectives of employees, stakeholders and experts were given equal weight in its conception and design. The platform now in use is the result of a participatory development process – what was implemented is the consensus reached by everyone involved.

In the search for the ideal KIDD process, buddy matching was the first model project to succeed. Encouraged by this, we are applying our experience to the next joint use case: using AI to accurately identify employees’ potential for specific projects and tasks.

The first KIDD conference in May 2022 brought representatives of society and politics together with KIDD project participants on a public stage. The work and results of KIDD to date were discussed in their regulatory and corporate context.

More Project Partners Welcome!

The KIDD Experimentation Space offers companies that are developing their own IT projects the chance to try out the approach for themselves: the experience gained and the resulting KIDD process should ultimately be universally applicable – the more numerous and diverse the practical projects and experimentation spaces, the better.

You are welcome to talk to us about your own projects and ideas at any time: the most direct way is to send an email to CHEMISTREE Managing Director Rosmarie Steininger.

AI Standardisation Roadmap

The federal government, together with DIN and DKE, published the AI Standardisation Roadmap in 2020. Developed with 300 experts from business, science, the public sector and society, the roadmap describes seven key topics of artificial intelligence, each with its status quo, its requirements and challenges, and the resulting need for standardisation. The main goal: to create a framework for action on AI standardisation, so that national benchmarks are carried to the European and international level and contribute to the trustworthiness and security of AI. The recommended measures are intended to give German providers guidance, transparent and fair competition, and technical sovereignty – all in the interest of globally competitive ‘AI made in Germany’.

Focus on Ethical AI

According to the AI Standardisation Roadmap, ‘responsible’ AI puts people at the center of development and application. It is measured against value-based standards and not viewed solely in terms of its technical components. Non-technical stakeholders and ‘interested parties’ therefore have to be involved in the process, and the more complex and critical the AI application, the more important this is, ideally as early as the design and development phase of a system. Standardisation is intended to accompany the development process and to minimise ethical risks when the application is in use.

With its criticality matrix, the standardisation roadmap offers a conceptual proposal for determining transparency obligations according to an application’s risk classification. It also lists a number of outstanding standardisation needs, such as how ethical values can be operationalised.

The Status

The roadmap results are already being implemented in specific projects to develop norms and standards. The aim is to create lighthouse projects and an implementation initiative for the testing and certification of AI systems. As of this year, the AI Standardisation Roadmap itself is also being developed further. Above all, EU legislation in the form of the Artificial Intelligence Act will largely define the regulatory framework for the development and use of secure, trustworthy AI applications in Europe. Recommendations from the German AI focus group also feed into the debate on content and the legislative process at EU level.